DFlash: Block Diffusion for Flash Speculative Decoding

In this work, we introduce DFlash, a method utilizing a lightweight block diffusion model for drafting in speculative decoding. This enables efficient and high-quality parallel drafting, pushing the limits of speculative decoding. DFlash achieves up to lossless acceleration for Qwen3-8B, nearly faster than the state-of-the-art speculative decoding method EAGLE-3.

Hugging Face models: [DFlash-Collection]

Quick Start

DFlash supports SGLang for production serving and Transformers for fast exploration. vLLM integration is in progress.

Installation

pip install "git+https://github.com/sgl-project/sglang.git@refs/pull/16818/head#subdirectory=python"

Usage

python -m sglang.launch_server \
    --model-path Qwen/Qwen3-Coder-30B-A3B-Instruct \
    --speculative-algorithm DFLASH \
    --speculative-draft-model-path z-lab/Qwen3-Coder-30B-A3B-DFlash \
    --tp-size 1 \
    --dtype bfloat16 \
    --attention-backend fa3 \
    --mem-fraction-static 0.75 \
    --trust-remote-code

Installation

pip install transformers==4.57.3 torch==2.9.1 accelerate

Usage

from transformers import AutoModel, AutoModelForCausalLM, AutoTokenizer

# 1. Load the DFlash Draft Model
model = AutoModel.from_pretrained(
    "z-lab/Qwen3-8B-DFlash-b16",
    trust_remote_code=True,
    dtype="auto",
    device_map="cuda:0"
).eval()

# 2. Load the Target Model
target = AutoModelForCausalLM.from_pretrained(
    "Qwen/Qwen3-8B",
    dtype="auto",
    device_map="cuda:0"
).eval()

# 3. Load Tokenizer and Prepare Input
tokenizer = AutoTokenizer.from_pretrained("Qwen/Qwen3-8B")
prompt = "How many positive whole-number divisors does 196 have?"
messages = [
    {"role": "user", "content": prompt}
]
text = tokenizer.apply_chat_template(
    messages,
    tokenize=False,
    add_generation_prompt=True,
    enable_thinking=False
)
model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

# 4. Run Speculative Decoding
generate_ids = model.spec_generate(
    input_ids=model_inputs["input_ids"],
    max_new_tokens=2048,
    temperature=0.0,
    target=target,
    stop_token_ids=[tokenizer.eos_token_id]
)

print(tokenizer.decode(generate_ids[0], skip_special_tokens=True))

Why DFlash?

Autoregressive Large Language Models (LLMs) have transformed the AI landscape, but their sequential nature creates a bottleneck: inference is slow, and GPU compute is often under-utilized.

Speculative decoding addresses this bottleneck by using a small draft model to generate tokens that the target LLM verifies in parallel. While effective, state-of-the-art methods like EAGLE-3 still rely on autoregressive drafting. This serial drafting process is inefficient and prone to error accumulation, effectively capping speedups at roughly 2–3× for popular models like the Qwen3 series.
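To make the draft-then-verify loop concrete, here is a minimal sketch of greedy speculative decoding with an autoregressive drafter. It is not the DFlash or EAGLE-3 implementation: `target_next` and `draft_next` are hypothetical toy functions standing in for real models, and verification is written as a loop even though a real target model scores all drafted positions in one forward pass.

```python
from typing import Callable, List, Tuple

Token = int
NextToken = Callable[[List[Token]], Token]


def target_next(ctx: List[Token]) -> Token:
    """Toy stand-in for the target model's greedy next token (hypothetical)."""
    return (sum(ctx) * 31 + len(ctx) * 7) % 50


def draft_next(ctx: List[Token]) -> Token:
    """Toy drafter: agrees with the target unless len(ctx) is a multiple of 4."""
    t = target_next(ctx)
    return t if len(ctx) % 4 else (t + 1) % 50


def greedy_decode(prompt: List[Token], n: int, target: NextToken) -> List[Token]:
    """Baseline: one target call per generated token."""
    out = list(prompt)
    for _ in range(n):
        out.append(target(out))
    return out


def speculative_decode(
    prompt: List[Token], n: int, k: int, draft: NextToken, target: NextToken
) -> Tuple[List[Token], int]:
    """Greedy speculative decoding; returns (tokens, number of verify cycles)."""
    out = list(prompt)
    cycles = 0
    while len(out) - len(prompt) < n:
        # 1. Draft k tokens serially: each draft call depends on the previous one.
        proposal: List[Token] = []
        for _ in range(k):
            proposal.append(draft(out + proposal))
        # 2. Verify: keep the longest prefix the target agrees with.
        accepted: List[Token] = []
        for tok in proposal:
            expected = target(out + accepted)
            if tok != expected:
                accepted.append(expected)  # target's token replaces the first mismatch
                break
            accepted.append(tok)
        else:
            accepted.append(target(out + accepted))  # all accepted: one bonus token
        out.extend(accepted)
        cycles += 1
    return out[: len(prompt) + n], cycles


prompt = [1, 2, 3]
spec_tokens, cycles = speculative_decode(prompt, 20, 4, draft_next, target_next)
print(spec_tokens == greedy_decode(prompt, 20, target_next), cycles)
```

Because every emitted token is either confirmed or supplied by the target, the output matches plain greedy decoding while using fewer verify cycles than tokens. The inner drafting loop is the serial part that autoregressive drafters cannot avoid.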

Diffusion LLMs (dLLMs) offer parallel text generation and bidirectional context modeling, presenting a promising alternative to autoregressive LLMs. However, current dLLMs still suffer from performance degradation compared to their autoregressive counterparts. Furthermore, their requirement for a large number of denoising steps to maintain generation quality limits their raw inference speed (d3LLM).

This presents a clear trade-off: AR models are performant but slow, while dLLMs allow for fast, parallel generation but often suffer from lower accuracy. Can we combine the strengths of both while avoiding their respective weaknesses? The natural solution is to utilize diffusion for drafting, taking advantage of parallelism, while relying on the AR model for verification.

How DFlash Works

However, using diffusion for drafting is non-trivial:

- Existing Diffusion Speculators are Impractical: Methods like DiffuSpec and SpecDiff-2 rely on massive 7B-parameter draft models. This high memory footprint makes them prohibitively expensive for real-world serving and limits speedups to roughly 3–4×.

- Small Diffusion Models Don’t Work: Simply shrinking the diffusion drafter fails. We trained a lightweight 5-layer block diffusion model (block size 16) from scratch on data generated by Qwen3-4B and used it for speculative decoding with Qwen3-4B on several math benchmarks. As shown in the table below, without additional help, the small model lacks the reasoning capability to align with the target, resulting in limited speedup.

| Temp | GSM8K Speedup / | Math500 Speedup / | AIME24 Speedup / | AIME25 Speedup / |
|---|---|---|---|---|
| 0 | 2.83 / 3.38 | 3.73 / 4.61 | 3.43 / 4.12 | 3.35 / 4.07 |
| 1 | 2.76 / 3.29 | 3.31 / 4.12 | 2.66 / 3.23 | 2.65 / 3.24 |
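One way to read these numbers is sketched below. It rests on an assumption, since the second column label is truncated in this copy: we treat the second value in each cell as the mean number of tokens produced per draft-and-verify cycle. If the baseline emits one token per target step, then speedup equals tokens per cycle divided by the cost of one cycle, so the ratio of the two values estimates that cost in units of one target decoding step. The helper name `implied_cycle_cost` is ours.

```python
# (speedup, second value) pairs copied from the table above.
# ASSUMPTION: the second value is the mean tokens produced per cycle.
results = {
    0: {"GSM8K": (2.83, 3.38), "Math500": (3.73, 4.61),
        "AIME24": (3.43, 4.12), "AIME25": (3.35, 4.07)},
    1: {"GSM8K": (2.76, 3.29), "Math500": (3.31, 4.12),
        "AIME24": (2.66, 3.23), "AIME25": (2.65, 3.24)},
}


def implied_cycle_cost(speedup: float, tokens_per_cycle: float) -> float:
    """Cost of one draft+verify cycle, in units of one target decoding step."""
    return tokens_per_cycle / speedup


for temp, row in results.items():
    for task, (speedup, tokens_per_cycle) in row.items():
        cost = implied_cycle_cost(speedup, tokens_per_cycle)
        print(f"temp={temp} {task}: cycle cost ~ {cost:.2f} target steps")
```

Under this reading, every cell implies a cycle cost close to 1.2 target steps. That would mean drafting overhead is already small, and the speedup is limited mainly by how many drafted tokens the target accepts.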

Is there no free lunch? Can we build a drafter that is both small (fast) and accurate (high acceptance)?

The Key Insight: The Target Knows Best

We demonstrate that a free lunch does exist. Our key insight is that the large AR target model’s hidden features implicitly contain information about future tokens, a phenomenon also observed by Samragh et al.

Instead of asking a tiny diffusion model to reason from scratch, DFlash conditions the draft model on context features extracted from the target model. This fuses the deep reasoning capabilities of the large model with the parallel generation speed of the small diffusion drafter.

Design

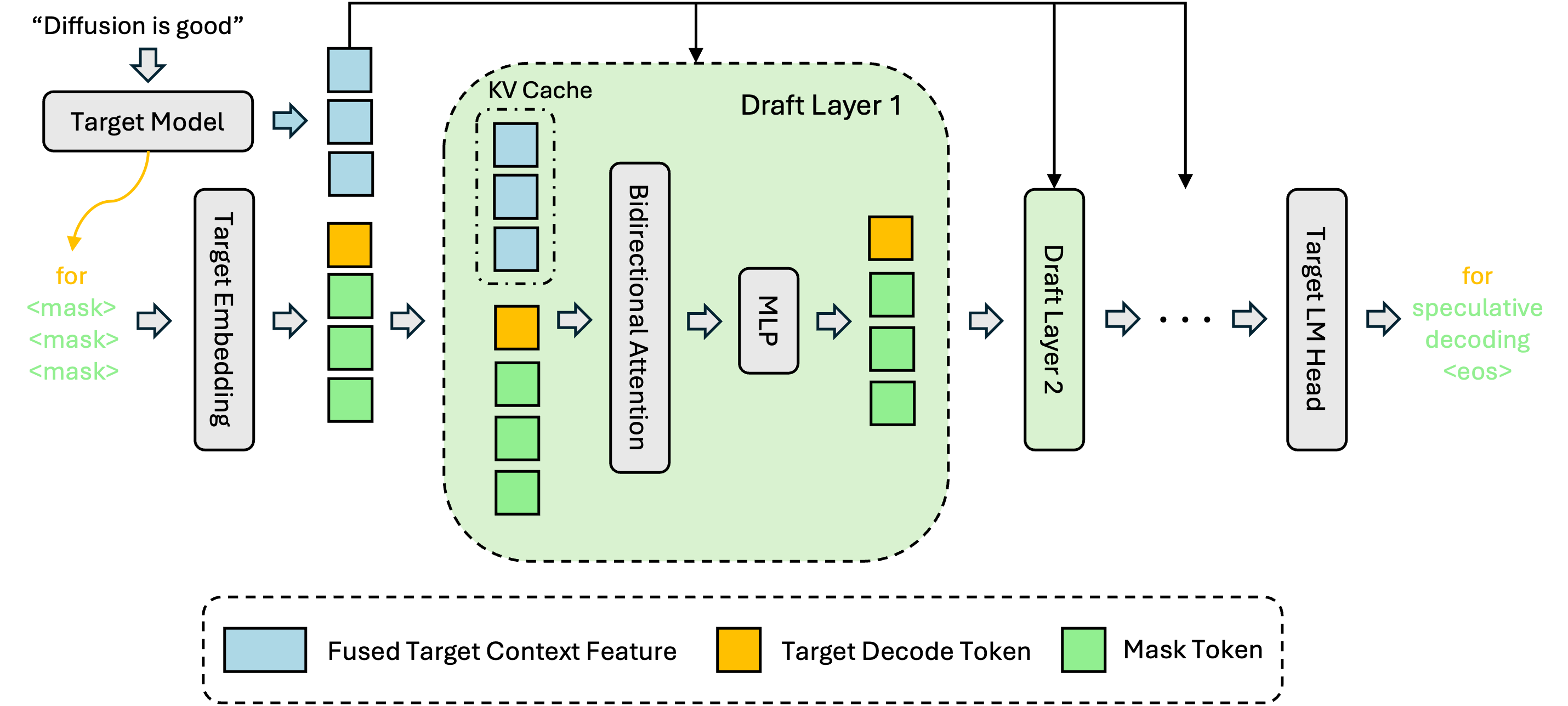

Figure 2 illustrates the system design of DFlash.

- Feature Fusion: After the prefill or verification steps, we extract and fuse the hidden features from the target model.

- Conditioning: These features are fed directly into the Key/Value (K,V) projections of the draft model layers and stored in the draft model’s KV cache.

- Parallel Drafting: Conditioned on this rich context (and the last verified token), the drafter predicts the next block of tokens in parallel using diffusion.
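The conditioning step can be sketched as a single attention layer in plain Python. This is an illustration under stated assumptions, not the released architecture: the function `draft_layer`, the single-head attention, the shared `W_k`/`W_v` projections for target features and draft inputs, and the equal feature and draft dimensions are all our simplifications. Positional encodings, the feature-fusion step, and the output projection are omitted.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[Vector]


def matvec(W: Matrix, x: Vector) -> Vector:
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def softmax(scores: Vector) -> Vector:
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def draft_layer(block_inputs: Matrix, target_features: Matrix,
                W_q: Matrix, W_k: Matrix, W_v: Matrix) -> Matrix:
    """One attention pass over [target-feature K/V] + [block K/V].

    All block positions are processed in the same pass and attend to every
    other block position (no causal mask), so the block is drafted in parallel.
    """
    # Context keys/values come from target features; a real system would
    # compute these once after prefill/verification and keep them in a KV cache.
    ctx_k = [matvec(W_k, h) for h in target_features]
    ctx_v = [matvec(W_v, h) for h in target_features]
    blk_k = [matvec(W_k, x) for x in block_inputs]
    blk_v = [matvec(W_v, x) for x in block_inputs]
    keys, values = ctx_k + blk_k, ctx_v + blk_v
    scale = 1.0 / math.sqrt(len(keys[0]))
    outputs: Matrix = []
    for x in block_inputs:
        q = matvec(W_q, x)
        weights = softmax([scale * sum(a * b for a, b in zip(q, k)) for k in keys])
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs


rng = random.Random(0)
d, n_context, block_size = 4, 3, 4
W_q, W_k, W_v = (random_matrix(d, d, rng) for _ in range(3))
features = random_matrix(n_context, d, rng)  # stand-in for fused target features
# Stand-in for [last verified token, mask, mask, mask] plus position information.
block = random_matrix(block_size, d, rng)
hidden = draft_layer(block, features, W_q, W_k, W_v)
print(len(hidden), len(hidden[0]))
```

Swapping in different `features` while keeping `block` fixed changes every output vector, which is the sense in which the drafter is conditioned on the target model.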

To minimize overhead, the draft model reuses the embedding and LM head layers from the target model, and only the intermediate layers are trained. We set the number of draft layers to 5, striking a balance between draft quality and speed.

Figure 2. The overall design of DFlash. We extract and fuse the hidden context features from the target model, feeding these features into each draft layer to perform conditional speculation.

Benchmark Results

We evaluate DFlash against EAGLE-3, the state-of-the-art speculative decoding method. For DFlash, the drafting block size is 16 and the number of denoising steps is 1. For EAGLE-3, we use the pretrained model RedHatAI/Qwen3-8B-speculator.eagle3 with a speculation length of 7. Check out our GitHub repository to reproduce the results.

[Figure: benchmark results chart across GSM8K, MATH-500, AIME24, AIME25, HumanEval, MBPP, LiveCodeBench, SWE-Bench, MT-Bench, and Alpaca; the vertical axis runs from 0 to 7.]

Conclusion

DFlash demonstrates the promise of applying diffusion during the drafting stage of speculative decoding, pushing the speed limits of autoregressive LLMs. While dLLMs offer parallel generation, they often suffer from quality degradation compared to state-of-the-art autoregressive models. DFlash shows that by utilizing dLLMs strictly for drafting, we can fully leverage their parallel efficiency without sacrificing output quality. Crucially, by conditioning the drafter on context features from the capable target LLM, DFlash maintains high acceptance rates.

DFlash establishes a new direction for the development of diffusion LLMs. Rather than struggling to train dLLMs to match the accuracy of autoregressive models, we can instead deploy them as specialized drafters. This approach allows us to safely reduce the number of denoising steps, fully utilizing parallel generation, while relying on verification to prevent quality loss. Furthermore, training a lightweight dLLM for drafting requires significantly less compute than training a large, standalone dLLM.

We are currently working on integrating DFlash into popular serving frameworks. We also plan to support a wider range of models, including large MoE models. DFlash is compatible with various inference-time acceleration techniques for dLLMs, such as those introduced in DiffuSpec and SpecDiff-2, which can further improve speedups; we plan to support these integrations soon. The current results are based purely on block diffusion with a block size of 16.

Citation

@article{chen2026dflash,
  title = {{DFlash: Block Diffusion for Flash Speculative Decoding}},
  author = {Chen, Jian and Liang, Yesheng and Liu, Zhijian},
  journal = {arXiv preprint arXiv:2602.06036},
  year = {2026}
}

Z Lab