SparseLoRA: Accelerating LLM Fine-Tuning with Contextual Sparsity

TL;DR: This paper introduces SparseLoRA, a method that uses contextual sparsity to accelerate LLM fine-tuning, cutting compute by up to 2.2× and reducing runtime while maintaining model accuracy on reasoning, coding, and instruction-following tasks, as well as ARC-AGI.

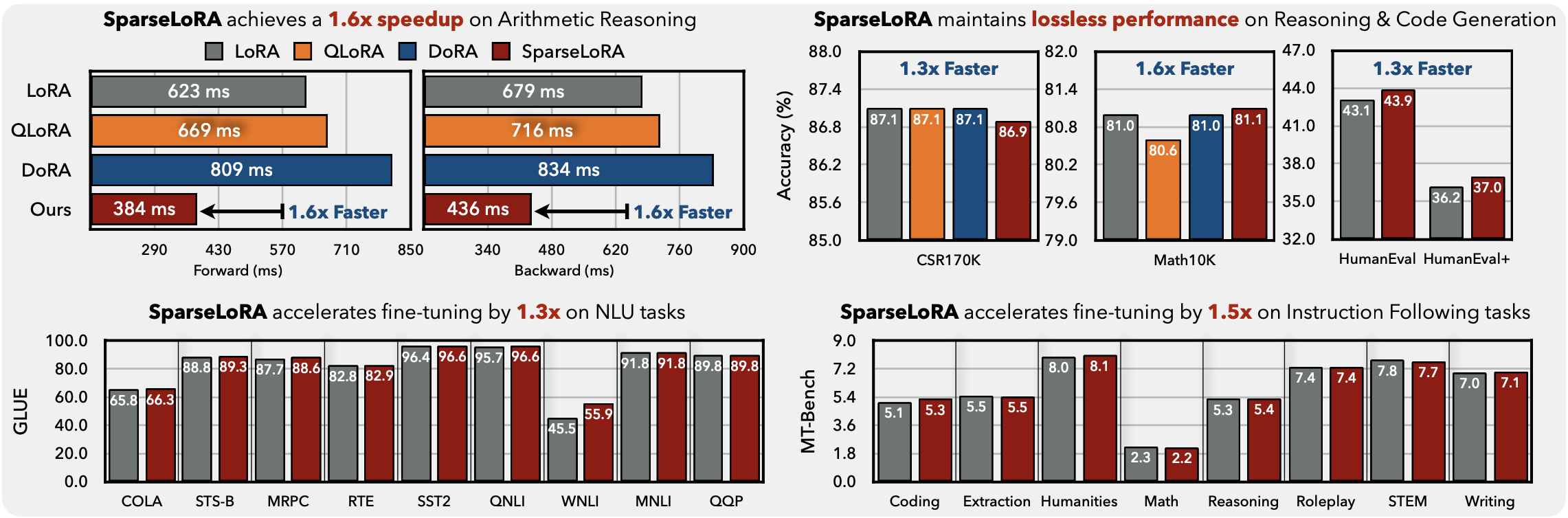

Figure 1: SparseLoRA leverages contextual sparsity to accelerate LLM fine-tuning. By dynamically identifying and pruning less important parameters during training, SparseLoRA achieves up to 2.2 times compute reduction and 1.5 times runtime speedup while maintaining model performance across reasoning, coding, and instruction-following tasks.

Quick Start for SparseLoRA

```bash
conda create -yn sparselora python=3.10
conda activate sparselora
bash scripts/environment_setup.sh
```

```python
from transformers import AutoModelForCausalLM, Trainer
from peft import get_peft_model, LoraConfig
from spft.api import SPFTConfig, get_spft_model

# Load LoRA + LLM (model and LoRA settings below are placeholders; use your own)
base_model = AutoModelForCausalLM.from_pretrained("NousResearch/Meta-Llama-3-8B-Instruct")
lora_cfg = LoraConfig(task_type="CAUSAL_LM")
model = get_peft_model(base_model, lora_cfg)

# Load Sparse Fine Tuning Config
spft_config = SPFTConfig.from_file("configs/sparsity/llama3-8b-math10k.yaml")

# Apply SparseLoRA Patches (SVD sparsity estimator & liger-kernel optimizations)
model = get_spft_model(model, spft_config)

# Launch Sparse Training
trainer = Trainer(
    model=model,
    # ... (training arguments and datasets)
)
trainer.train()
```

Why We Built SparseLoRA

Fine-tuning large language models has become increasingly accessible thanks to parameter-efficient methods like LoRA, QLoRA, and DoRA. These approaches dramatically reduce the number of trainable parameters and ease memory requirements — but they leave one major bottleneck untouched: latency.

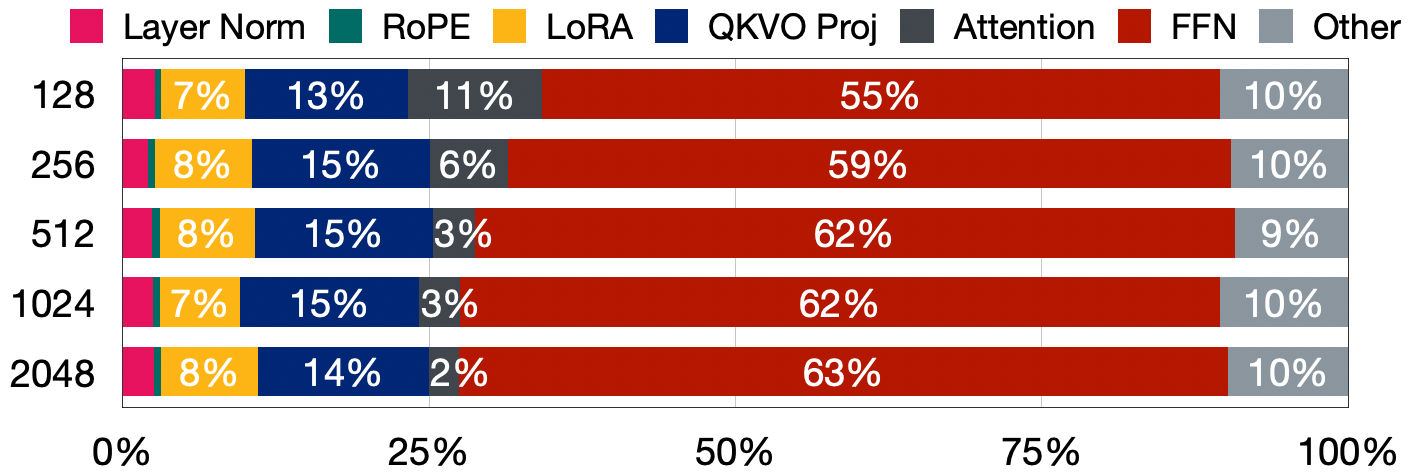

During our early experiments, we noticed something unexpected: QLoRA and DoRA were often slower than plain LoRA, despite being more memory-efficient. Profiling revealed the issue — the majority of computation was still going into the frozen base model, not the adapters.

This observation became the spark for SparseLoRA. If most of the compute happens in frozen layers, and we’re not updating them — why compute all of it?

Figure 2: Runtime breakdown of LoRA fine-tuning shows the bulk of compute is allocated to frozen base layers.
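To see why the frozen layers dominate, here is a rough per-token FLOP count for one projection in the forward pass. The 4096 × 4096 shape and rank 16 are illustrative assumptions, not SparseLoRA settings, and the count ignores the backward pass, where the frozen weights still have to propagate activation gradients even though they receive no weight updates.

```python
def linear_flops_per_token(d_in: int, d_out: int) -> int:
    """Forward FLOPs (multiply + add) of a dense d_in -> d_out projection for one token."""
    return 2 * d_in * d_out


def lora_flops_per_token(d_in: int, d_out: int, rank: int) -> int:
    """Forward FLOPs of the LoRA branch B(Ax), with A: rank x d_in and B: d_out x rank."""
    return 2 * rank * d_in + 2 * d_out * rank


# Illustrative (assumed) sizes: a 4096 x 4096 projection with a rank-16 adapter.
d_in, d_out, rank = 4096, 4096, 16
base = linear_flops_per_token(d_in, d_out)
adapter = lora_flops_per_token(d_in, d_out, rank)
print(base, adapter, adapter / base)
```

With these sizes the adapter adds 1/128 of the base projection's forward FLOPs (under 1%), so any real saving has to come from the frozen matmul itself.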

Challenging a Blind Spot in PEFT

While most of the community focused on memory bottlenecks, compute efficiency remained an overlooked frontier. Around the same time, work on contextual sparsity in LLM inference was gaining traction — demonstrating that many hidden states could be skipped based on the input.

But no one had applied that idea to fine-tuning. We started asking: could we leverage contextual sparsity not just to accelerate inference, but to actually skip unnecessary computations during training itself?

It turns out we could — but getting there took some work.

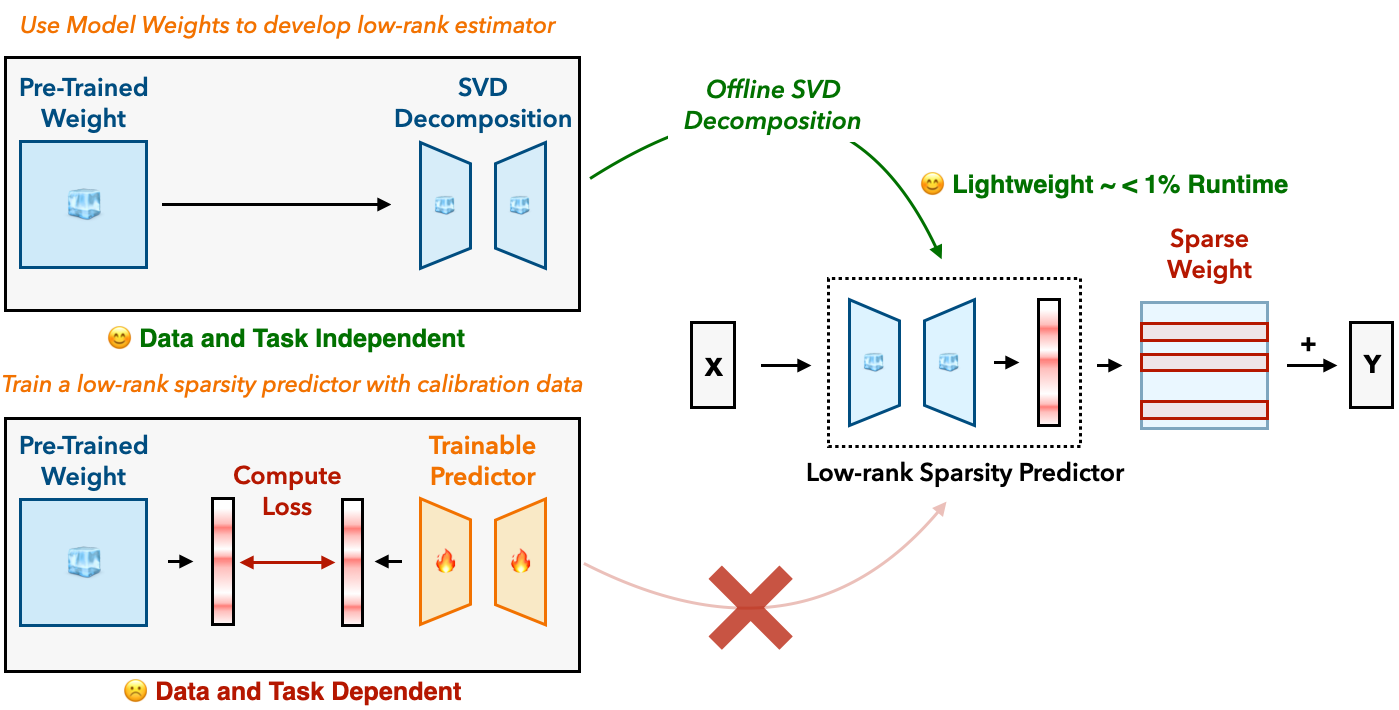

A Simple, Training-Free Approach

Rather than learning a complex predictor, we use a lightweight SVD-based sparsity estimator. It’s offline, training-free, and incredibly efficient — adding just 0.8% overhead. In contrast to heavyweight approaches that retrain or approximate gradients, SparseLoRA focuses on practical, plug-and-play speedups.

Figure 3: The SVD Predictor is task-independent, making it a more generalizable choice.
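The general idea of an SVD-based estimator can be sketched as follows, assuming it truncates the SVD of each frozen weight offline and then uses the low-rank factors at runtime to approximate that projection's output cheaply. The function names and the rank are hypothetical; this is an illustration of the technique, not SparseLoRA's implementation.

```python
import numpy as np


def build_svd_predictor(weight: np.ndarray, rank: int):
    """Offline: truncated SVD of a frozen weight of shape (d_out, d_in).

    Returns (down, up) with weight ~= up @ down, where down is (rank, d_in)
    and up is (d_out, rank).
    """
    u, s, vt = np.linalg.svd(weight, full_matrices=False)
    down = s[:rank, None] * vt[:rank]  # fold the top singular values into the down factor
    up = u[:, :rank]
    return down, up


def estimate_output(x: np.ndarray, down: np.ndarray, up: np.ndarray) -> np.ndarray:
    """Runtime: cheap low-rank estimate of x @ weight.T for tokens x of shape (n_tokens, d_in)."""
    return (x @ down.T) @ up.T


rng = np.random.default_rng(0)
w = rng.standard_normal((64, 32))  # stand-in for a frozen projection weight
x = rng.standard_normal((10, 32))  # stand-in for 10 token activations
down, up = build_svd_predictor(w, rank=8)
approx = estimate_output(x, down, up)  # shape (10, 64), used only to rank channels
```

For a d_out × d_in weight, the estimate costs roughly rank × (d_in + d_out) multiply-adds per token instead of d_in × d_out, which is why a small rank keeps the overhead low. Because the estimate only feeds a channel ranking, it presumably needs to preserve the relative ordering of channels rather than exact values.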

Pruning Metrics at a Glance

To apply contextual sparsity effectively, SparseLoRA uses simple, interpretable metrics to decide which channels to keep. These vary depending on the type of layer being sparsified:

QK Projections (Attention Input)

For query and key projections, we use a QK Norm metric. It multiplies the L2 norms of the query and key activations to estimate each channel’s importance to attention scores:

$$s_j = \lVert Q_{:,j} \rVert_2 \cdot \lVert K_{:,j} \rVert_2$$

where $Q_{:,j}$ and $K_{:,j}$ are the query and key activations of channel $j$ across all tokens. Only the top-ranked channels by $s$ are kept, preserving the key components of the attention mechanism. The query and key activations used for this score are the low-rank estimates produced by the SVD predictor.
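A minimal NumPy sketch of QK-norm channel selection, assuming the score for channel j is the product of the L2 norms (taken over tokens) of the estimated query and key activations in that channel. The function names are hypothetical, and the toy inputs stand in for low-rank estimates.

```python
import numpy as np


def qk_norm_scores(q_est: np.ndarray, k_est: np.ndarray) -> np.ndarray:
    """Per-channel score s_j = ||Q[:, j]||_2 * ||K[:, j]||_2 for (n_tokens, d) inputs."""
    return np.linalg.norm(q_est, axis=0) * np.linalg.norm(k_est, axis=0)


def top_k_channels(scores: np.ndarray, keep: int) -> np.ndarray:
    """Indices of the `keep` highest-scoring channels, in ascending order."""
    return np.sort(np.argsort(scores)[-keep:])


# Toy estimates: 2 tokens, 3 channels. Channel 1 has a zero query but a large key.
q_est = np.array([[1.0, 0.0, 2.0], [1.0, 0.0, 2.0]])
k_est = np.array([[3.0, 5.0, 0.5], [3.0, 5.0, 0.5]])
scores = qk_norm_scores(q_est, k_est)  # approximately [6, 0, 2]
kept = top_k_channels(scores, keep=2)  # channels 0 and 2
```

One way to see why the product is a natural score: channel j contributes the rank-one matrix $Q_{:,j} K_{:,j}^\top$ to the attention logits $QK^\top$, and the Frobenius norm of that matrix is exactly $\lVert Q_{:,j} \rVert_2 \lVert K_{:,j} \rVert_2$. In the toy example, ranking by the key norm alone would keep channel 1 instead of channel 2, even though channel 1 contributes nothing to the logits.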

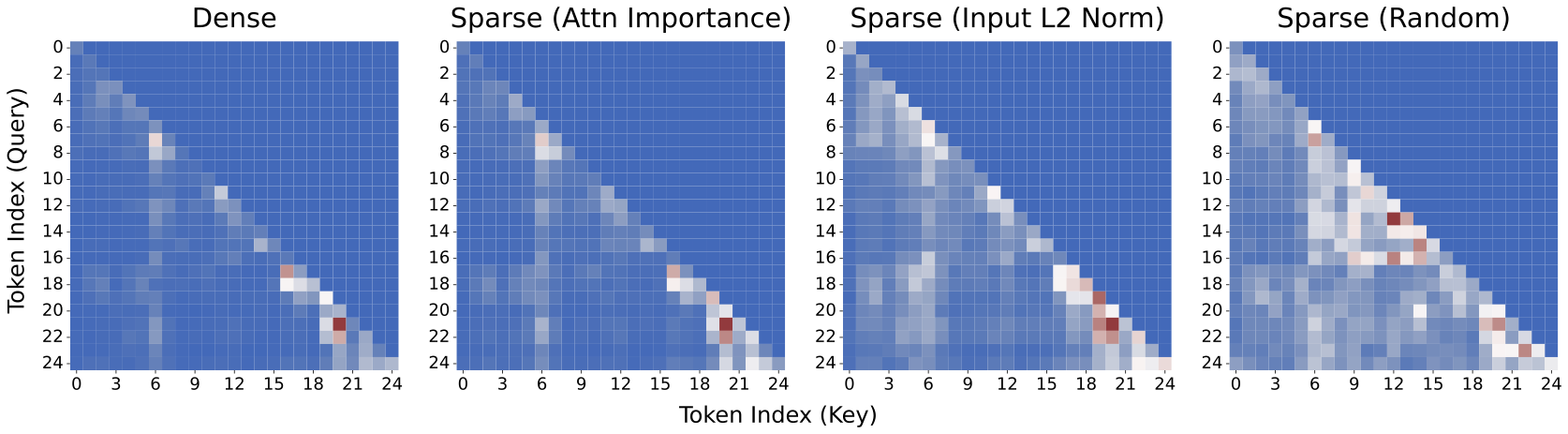

As shown below, ranking channels by a plain L2 norm does not retain the same attention-map characteristics:

Figure 4: Attention map visualizations for different metrics. Our metric closely resembles the dense attention map.

VO Projections (Attention Output)

For value and output projections, we apply a simple L2 norm on the low-rank estimated output of the V projection:

$$s_j = \lVert V_{:,j} \rVert_2$$

where $V_{:,j}$ is the estimated V output for channel $j$ across all tokens. Channels with higher L2 norm values are more informative and are retained. Since the V and O projections are applied in sequence, the channels kept at the output of V also determine which input channels of O are pruned.
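The sketch below (NumPy, a single attention head, hypothetical function names) illustrates why V and O can share one set of indices: attention mixes tokens, not channels, so dropping channel j from V's output only zeroes column j of the attention output, and column j of O then multiplies zeros. Slicing V's output rows and O's input columns with the same indices therefore reproduces the dense result with those V channels zeroed.

```python
import numpy as np


def prune_vo(w_v, w_o, v_est, keep):
    """Pick V/O channels by the L2 norm (over tokens) of the estimated V output.

    w_v: (d_v, d_model) value weight; w_o: (d_model, d_v) output weight;
    v_est: (n_tokens, d_v) estimate of x @ w_v.T.
    """
    scores = np.linalg.norm(v_est, axis=0)
    idx = np.sort(np.argsort(scores)[-keep:])
    # The same indices slice V's output rows and O's input columns.
    return idx, w_v[idx, :], w_o[:, idx]


def vo_path(x, attn, w_v, w_o):
    """Single-head value/output path: attn mixes tokens, w_v and w_o act on channels."""
    return attn @ (x @ w_v.T) @ w_o.T


rng = np.random.default_rng(0)
x = rng.standard_normal((5, 8))
w_v = rng.standard_normal((6, 8))
w_o = rng.standard_normal((8, 6))
attn = np.full((5, 5), 0.2)  # any row-stochastic token-mixing matrix works here

# The exact V output stands in for the low-rank estimate in this toy example.
idx, w_v_kept, w_o_kept = prune_vo(w_v, w_o, x @ w_v.T, keep=4)
sparse_out = vo_path(x, attn, w_v_kept, w_o_kept)

# Reference: dense computation with the dropped V channels zeroed out.
w_v_masked = w_v.copy()
dropped = np.setdiff1d(np.arange(w_v.shape[0]), idx)
w_v_masked[dropped] = 0.0
assert np.allclose(sparse_out, vo_path(x, attn, w_v_masked, w_o))
```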

Our Journey Through Sensitivity

SparseLoRA is the result of months of sensitivity analysis. We discovered that reducing compute without hurting performance required carefully balancing sparsity across multiple axes:

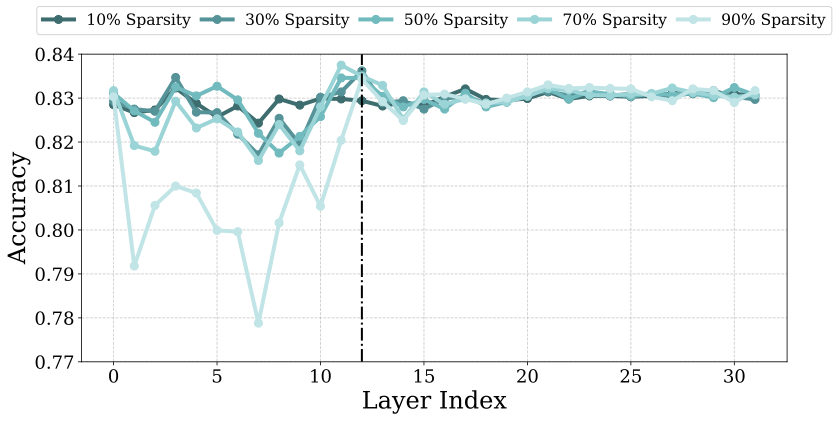

🔍 Layer Sensitivity

Different layers in large language models exhibit very different tolerance to sparsity. We found that:

- Deeper layers are often more redundant and can handle aggressive sparsification.

- Shallow layers, closer to the input, are more sensitive and should be kept denser.

Figure 5a: Accuracy drops vary across layers — deeper layers can tolerate higher sparsity.

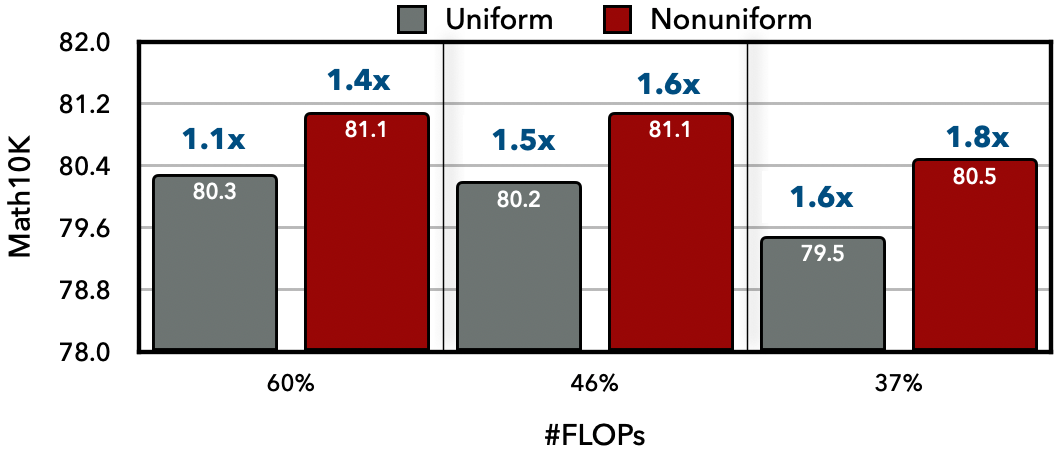

By applying non-uniform sparsity based on this per-layer sensitivity, we preserved performance while reducing compute. In fact, concentrating sparsity in specific layers reduces overhead and achieves higher speedup and accuracy at the same overall sparsity and FLOPs ratio:

Figure 5b: Accuracy of uniform and nonuniform sparsity strategies on Math10K for LLaMA3-8B-Instruct.
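A non-uniform schedule can be as simple as the hypothetical helper below, which keeps the first few layers dense and spreads a target average sparsity over the deeper layers. It assumes every layer costs the same FLOPs, so the mean sparsity equals the fraction of layer compute skipped; the layer split and target are illustrative values, not SparseLoRA's tuned settings.

```python
def layerwise_sparsity(n_layers: int, n_dense: int, target: float) -> list[float]:
    """Hypothetical schedule: first `n_dense` layers dense, deeper layers share the budget.

    The returned per-layer sparsities average to `target`.
    """
    deep = n_layers - n_dense
    if deep <= 0:
        raise ValueError("need at least one sparse layer")
    per_layer = target * n_layers / deep
    if per_layer > 1.0:
        raise ValueError("target sparsity too high for this many dense layers")
    return [0.0] * n_dense + [per_layer] * deep


# 32 layers, first 8 kept dense, 30% average sparsity:
# the 24 deeper layers each run at about 40% sparsity.
schedule = layerwise_sparsity(32, 8, 0.3)
```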

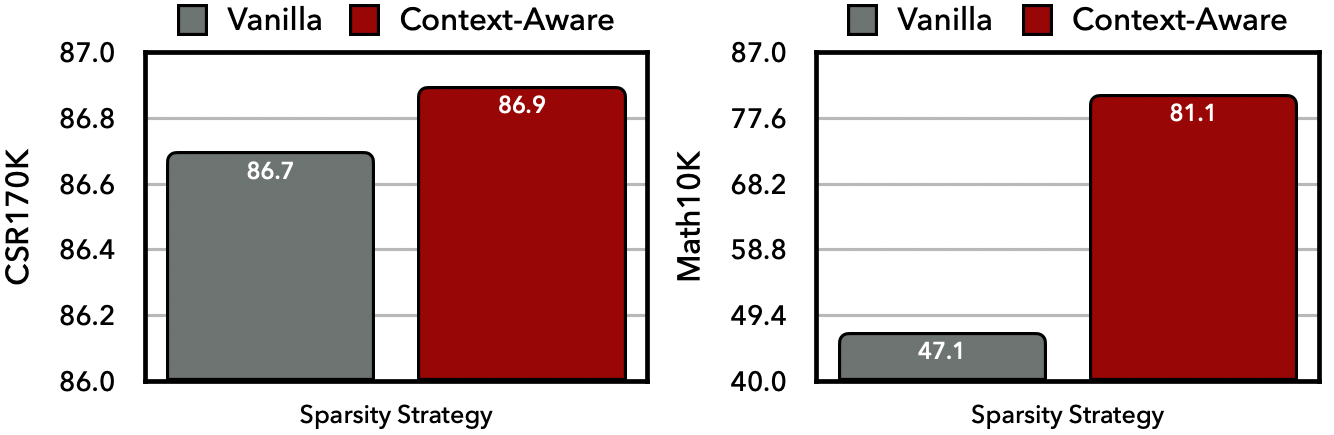

🧠 Token Sensitivity

Not all tokens should be treated equally during fine-tuning.

- Output tokens are used to compute the loss and gradients — they are highly sensitive to sparsity.

- Context tokens are less critical and can be safely sparsified.

We apply context-aware sparsity, which splits the computation so that sparsity is concentrated on the context tokens while the output tokens are computed densely. This simple trick avoids degradation while still delivering a speedup.

Figure 5c: Output tokens are more sensitive to sparsity than input/context tokens, so we split the compute to preserve performance.
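Context-aware sparsity can be sketched as a frozen projection that routes loss-bearing output tokens through the dense weight and context tokens through a channel-pruned weight. This is a minimal NumPy illustration with hypothetical names; it assumes a boolean mask marking output tokens (for example, derived from the positions that carry labels) and prunes output channels for context tokens only.

```python
import numpy as np


def split_sparse_linear(x, weight, is_output, keep_idx):
    """Frozen projection with dense output tokens and channel-pruned context tokens.

    x: (n_tokens, d_in); weight: (d_out, d_in); is_output: bool mask (n_tokens,)
    marking loss-bearing tokens; keep_idx: output channels computed for context
    tokens (the remaining channels are left at zero for those tokens).
    """
    y = np.zeros((x.shape[0], weight.shape[0]), dtype=x.dtype)
    y[is_output] = x[is_output] @ weight.T  # dense path for output tokens
    ctx = ~is_output
    y[np.ix_(ctx, keep_idx)] = x[ctx] @ weight[keep_idx].T  # sparse path for context tokens
    return y


rng = np.random.default_rng(1)
x = rng.standard_normal((6, 4))
w = rng.standard_normal((5, 4))
is_output = np.array([False, False, False, False, True, True])  # last two tokens carry the loss
keep = np.array([0, 2])
y = split_sparse_linear(x, w, is_output, keep)
```

The two paths run as separate matmuls, and only the context path shrinks. Since context tokens often make up most of a training sequence, that is where most of the savings would come from.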

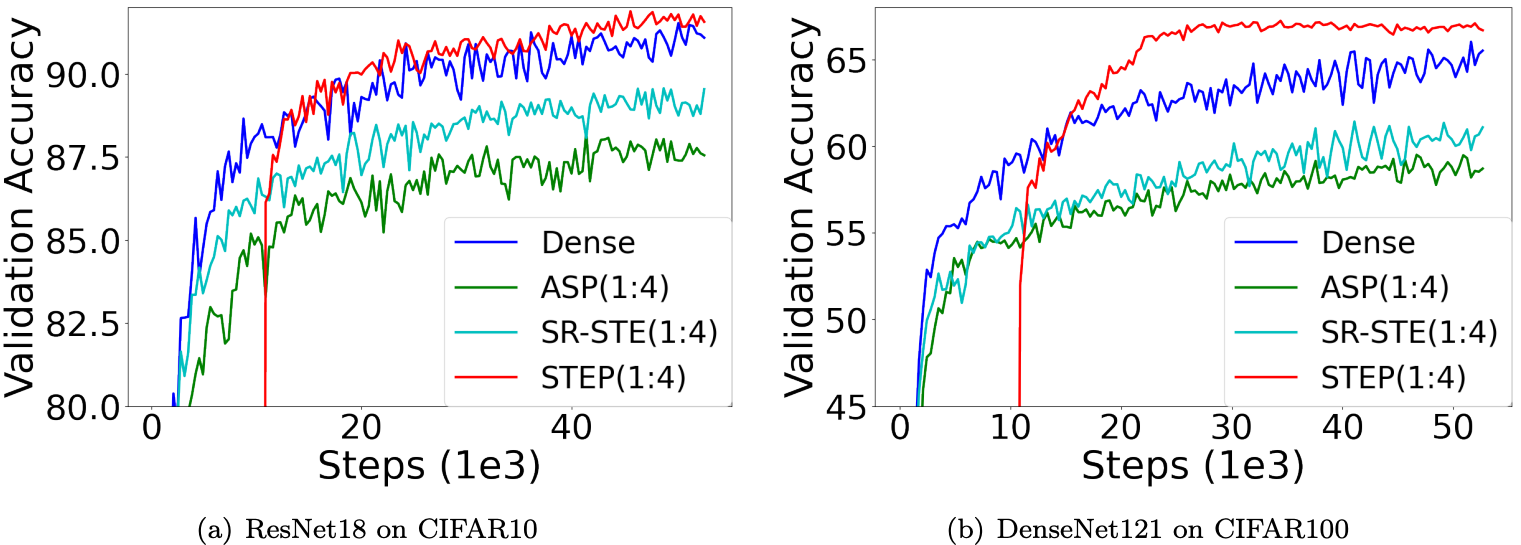

🕰️ Step Sensitivity

Early training steps are crucial for establishing good gradient flow. Prior work, notably STEP, has shown that introducing sparsity progressively is better for training than introducing it from the first step.

Hence, to avoid disrupting early optimization, we fine-tune densely for the first few steps (up to 10% of training) before introducing sparsity. This progressive strategy balances performance and efficiency over the full training run.

Figure 5d: Figure from STEP. Offsetting the sparsity step can help improve performance.
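The dense warm-up amounts to a step-based switch. Below is a hypothetical helper that uses the 10% figure from the text as its default; SparseLoRA's own scheduling logic may differ.

```python
def use_sparsity(step: int, total_steps: int, dense_fraction: float = 0.1) -> bool:
    """Hypothetical schedule: dense for the first `dense_fraction` of steps, sparse afterwards."""
    return step >= int(dense_fraction * total_steps)


total = 1000
dense_steps = sum(not use_sparsity(s, total) for s in range(total))
print(dense_steps)  # 100
```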

Fine-Tuning Dynamics:

SparseLoRA achieves training dynamics similar to standard PEFT fine-tuning, at an accelerated pace. It speeds up fine-tuning for code generation and complex instruction following by up to 1.8× over standard PEFT implementations while retaining loss-convergence behavior and maintaining downstream performance.

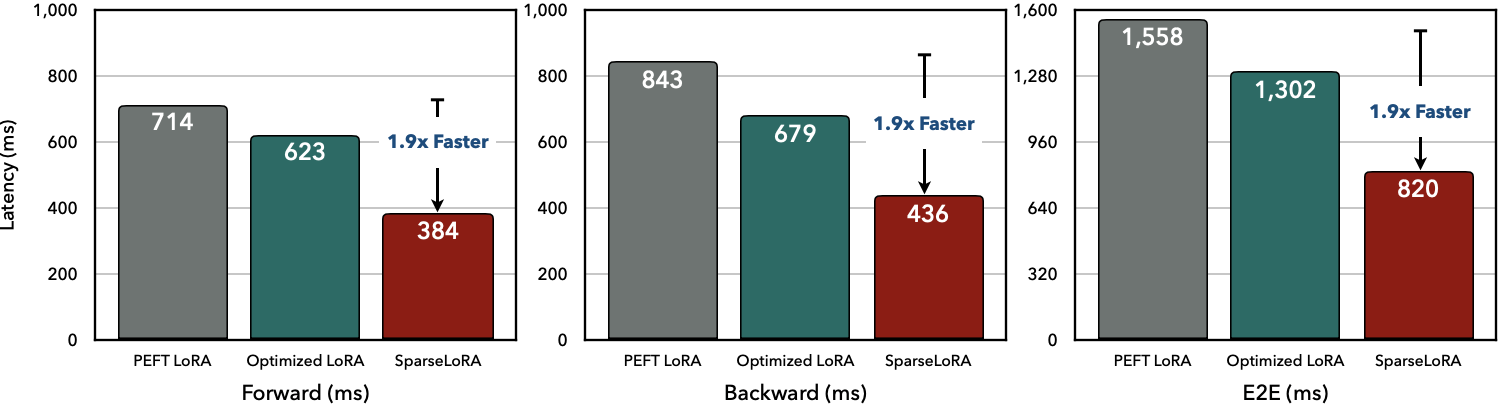

Accelerating PEFT Implementations:

Hugging Face's PEFT library is widely used for fine-tuning LLMs. Applying SparseLoRA to PEFT implementations is as easy as calling our get_spft_model(). Besides enabling sparsity in the fine-tuning process, it also patches the base PEFT LoRA implementation to reduce overheads from components such as rotary embeddings and residual adds. Cumulatively, this brings up to a 1.9× speedup in the fine-tuning process.

Figure 7: SparseLoRA brings up to 1.9× speedup on out-of-the-box LoRA PEFT implementations.

ARC-AGI

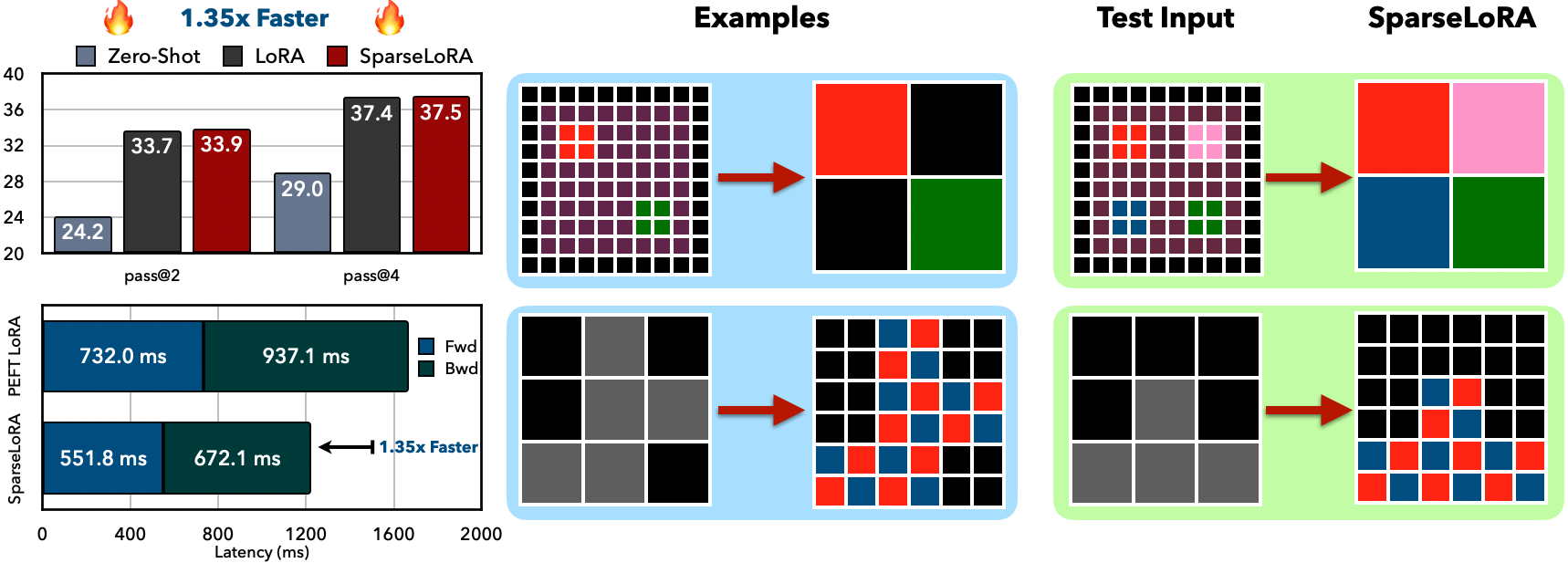

SparseLoRA easily extends to test-time training, offering up to a 1.35× speedup while maintaining performance.

Figure 8: SparseLoRA shows speedup with strong performance on ARC-AGI.

Memory Efficient Fine-Tuning Support

In addition to accelerating fine-tuning, SparseLoRA can be combined with existing memory-efficient fine-tuning techniques to gain their memory savings as well.

QLoRA support:

Try Sparse-QLoRA, a fast and memory-efficient fine-tuning technique. For instance, to accelerate LLaMA3-8B fine-tuning with QLoRA on the Arithmetic Reasoning benchmark:

```bash
bash scripts/math10k.sh "NousResearch/Meta-Llama-3-8B-Instruct" "llama3-8b-math10k.yaml" --peft qlora
```

Unsloth support:

For long sequence fine-tuning, Unsloth achieves impressive memory reduction and speed compared with standard gradient-checkpointing approaches. These can be further optimized with our sparsity techniques. Test out SparseLoRA + Unsloth using:

```bash
bash scripts/math10k.sh "NousResearch/Meta-Llama-3-8B-Instruct" "llama3-8b-math10k.yaml" --enable-unsloth True
```

Conclusion

SparseLoRA offers a practical path to faster, compute-efficient fine-tuning by introducing contextual sparsity into the training loop, something that prior PEFT methods largely overlooked. By selectively skipping computation in the frozen base layers, SparseLoRA opens new opportunities for fine-tuning methods that are both parameter- and compute-efficient.

Key takeaways:

- ✅ Up to 2.2 times FLOP reduction and 1.9 times measured speedup over PEFT during fine-tuning — without accuracy loss.

- 🧠 Introduces a training-free SVD-based sparsity estimator, enabling plug-and-play sparsity control per layer.

- 🔍 Systematic analysis reveals sensitivity across layers, tokens, and training stages, shaping SparseLoRA’s dynamic sparsification policy.

Acknowledgment

We would like to thank Google-BAIR Commons, Google DeepMind, and POSCO HOLDINGS for their support of this research. We are also grateful to NVIDIA for providing GPU hardware.

Citation

```bibtex
@inproceedings{khaki2025sparselora,
  title={SparseLo{RA}: Accelerating {LLM} Fine-Tuning with Contextual Sparsity},
  author={Samir Khaki and Xiuyu Li and Junxian Guo and Ligeng Zhu and Konstantinos N. Plataniotis and Amir Yazdanbakhsh and Kurt Keutzer and Song Han and Zhijian Liu},
  booktitle={Forty-second International Conference on Machine Learning},
  year={2025},
  url={https://openreview.net/forum?id=z83rodY0Pw}
}
```

Z Lab
